The Experience of an External QA Team Working on a Game: The SunStrike Studios Case Study

Anton Tatarinov and Ilya Zakharov from SunStrike shared their experience of setting up external testing with App2Top.

Anton Tatarinov, Head of QA, and Ilya Zakharov, QA Lead

The case we discuss in this article is absolutely real, as are the challenges we faced when setting up the processes. However, we won't be naming the company or the project due to NDA obligations.

Everything began when a well-known company in the market reached out to us at SunStrike. They needed our help to set up external QA and then test the project before its global launch.

Audit

Before starting work, we needed to audit the project, so our QA lead was the first to join it. He had three tasks to accomplish.

- Analyze the current state of the project: its documentation, the team and its workflow, and the tools in use.

- Develop and agree on workflow adjustments considering testing needs and project specifics.

- Establish communication channels for external testing with the development team.

Analyzing the existing workflow didn’t take long since the team was already complaining about certain aspects of task execution. We identified the following weaknesses:

- Poor detailing in task tracker cards for tasks and bugs, which led to confusion and sometimes to the loss of certain features and fixes;

- Insufficient documentation on the tool stack used in the project;

- Lack of a workflow for interaction between the development team and the testing team.

After considering the feedback and completing the audit, we set out to describe and correct the work process. We decided to use the waterfall development model, which seemed the most suitable to us.

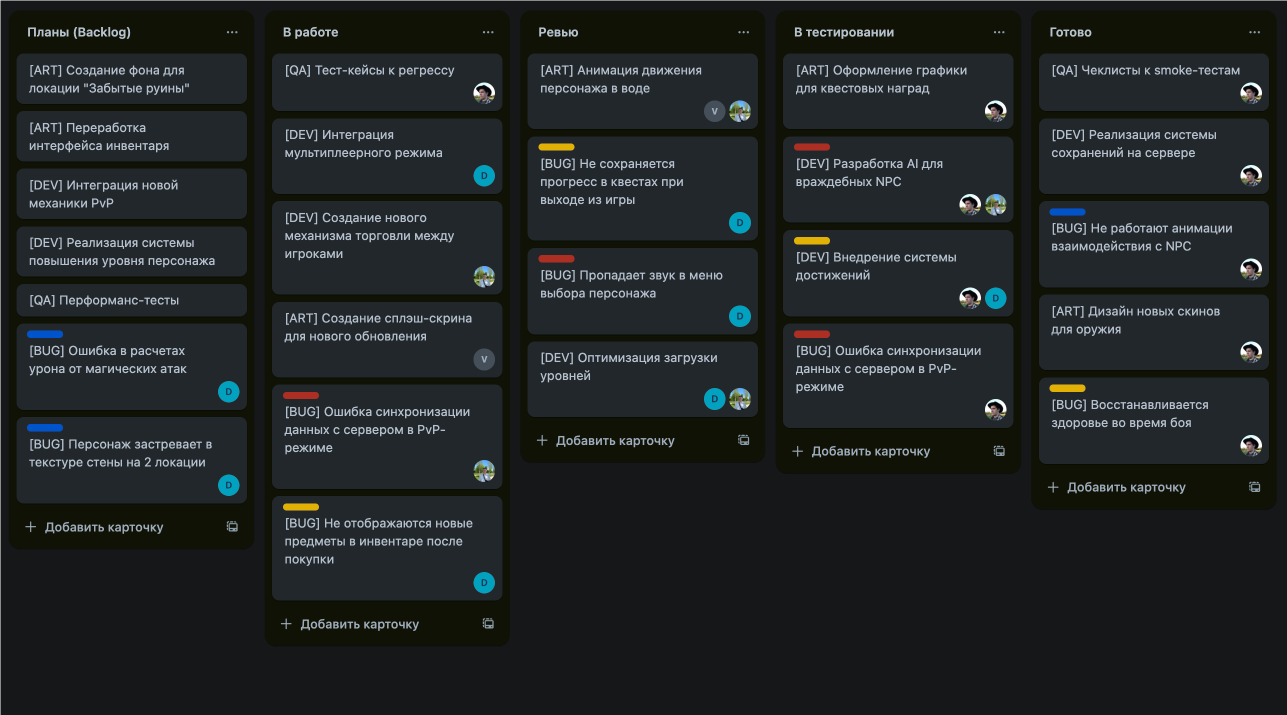

In our case, the waterfall consisted of several steps.

- When a task is created, it goes into the “Plans” column (essentially the backlog). At this stage, the task should contain brief information about what should be implemented and have a link to a specific design document page.

- Then, when the task is taken for work by a specialist (depending on who it's assigned to), it moves to the “In Progress” column.

- Upon completion, it enters the “Review” column, where department leads check the task for compliance of the final result with the original task description.

- Then, any completed feature is sent for testing, and accordingly, the task moves to the “Testing” column.

From there, a split occurs.

- If discrepancies or bugs are found in the task during testing, a ticket is created according to the agreed-upon procedure. All team members must follow this procedure whenever they log an error. The ticket goes into the “Plans” column and is assigned to the person who handled the original task. The task itself is flagged, indicating to team members that it awaits correction.

- If no errors are detected during testing, the task moves to the “Done” status.
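The column flow and the split after testing can be sketched as a small transition table. This is only an illustration under assumptions: the column names come from the text, while the `Column` enum, the dictionary-based task format, and the function names are hypothetical and are not the tracker's real API.

```python
from enum import Enum


class Column(Enum):
    PLANS = "Plans"
    IN_PROGRESS = "In Progress"
    REVIEW = "Review"
    TESTING = "Testing"
    DONE = "Done"


# Allowed forward moves, following the steps described in the text.
ALLOWED_MOVES = {
    Column.PLANS: {Column.IN_PROGRESS},
    Column.IN_PROGRESS: {Column.REVIEW},
    Column.REVIEW: {Column.TESTING},
    Column.TESTING: {Column.DONE},
    Column.DONE: set(),
}


def move(current: Column, target: Column) -> Column:
    """Return the new column, or raise if the workflow does not allow the move."""
    if target not in ALLOWED_MOVES[current]:
        raise ValueError(f"Cannot move from {current.value} to {target.value}")
    return target


def finish_testing(task: dict, bugs_found: list) -> list:
    """Apply the split after testing.

    With bugs: flag the task and return new tickets for the "Plans" column,
    assigned to the person who handled the original task.
    Without bugs: move the task to "Done" and return no tickets.
    """
    if task["column"] is not Column.TESTING:
        raise ValueError("Task is not in testing")
    if not bugs_found:
        task["column"] = move(task["column"], Column.DONE)
        return []
    task["flagged"] = True
    return [
        {
            "title": title,
            "column": Column.PLANS,
            "assignee": task["assignee"],
            "flagged": False,
        }
        for title in bugs_found
    ]
```

Keeping the allowed moves in one table makes it easy to see, and to check automatically, that a card cannot jump from “Plans” straight to “Done”.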

Our kanban board for a similar project

Also, as part of adjusting the work process, we identified the problem of insufficient information on the project's stack. In simpler terms, there was no documentation on what tools were being used and how the development team worked with them. Such documentation was needed so that the QA team had a general understanding of the processes and spent less time on clarifications later.

Regular Testing

After aligning changes to the workflow, it was time to set up the testing itself. We began with test planning, clarifying:

- What tasks await us;

- When and how to take them up;

- What activities to conduct and at what stages.

It was also necessary to develop requirements for bug prioritization and prepare report templates for bugs and features. Moreover, it was important to state up front what we would not be testing and why.
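A bug report template and a priority scale can be expressed as a small data structure. The sketch below is an assumption for illustration only: the field names, the four priority levels, and the helper functions are hypothetical and do not reproduce the templates used on the project.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Priority(IntEnum):
    # Hypothetical scale; a real project agrees on its own levels.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    BLOCKER = 4


@dataclass
class BugReport:
    title: str
    steps_to_reproduce: list
    expected_result: str
    actual_result: str
    build: str
    device: str
    priority: Priority
    attachments: list = field(default_factory=list)

    def missing_fields(self) -> list:
        """Names of required fields that were left empty."""
        required = {
            "title": self.title,
            "steps_to_reproduce": self.steps_to_reproduce,
            "expected_result": self.expected_result,
            "actual_result": self.actual_result,
            "build": self.build,
            "device": self.device,
        }
        return [name for name, value in required.items() if not value]


def triage_order(reports: list) -> list:
    """Sort reports so that the highest priority comes first."""
    return sorted(reports, key=lambda report: report.priority, reverse=True)
```

A check like `missing_fields()` is one way to catch poorly detailed cards before they reach the developer.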

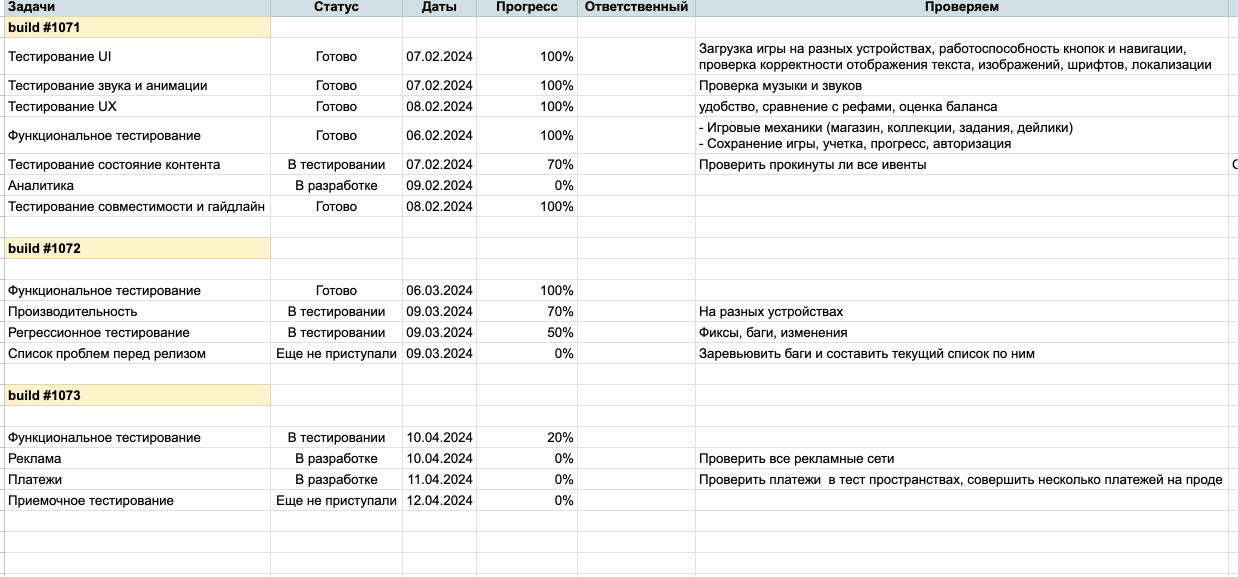

Example of a test plan

We then proceeded with the testing itself. We worked iteratively with newly arriving features and fixes for previously found bugs. The process was built on four activities.

- Short smoke tests lasting up to an hour. They are designed to identify critical issues before testing starts, allowing for early detection of blocking bugs.

- Functional testing. The test analysis and test design methods for each feature can differ. Regardless of the approach, it's crucial to prepare documentation and references for a feature before it reaches you in a build. Some features will be described with test cases, some will just require a checklist, and sometimes a simple table will suffice.

- Regression testing. We didn’t use it in every iteration. It depended on the volume of changes, given that such testing takes a lot of time. Importantly, regardless of how often you conduct it, the checklist should be updated in each iteration with every new feature or change. Otherwise, you might find that a previously prepared checklist or set of test cases becomes outdated right when you actually need it.

- Intuitive testing (Ad-hoc testing). With each new feature, it's better to spend any spare time simply playing the game rather than intentionally seeking bugs. Intuitive testing, where the tester acts as a user, often leads to changes in functionality or UI.
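The advice to update the regression checklist in every iteration can be backed by a simple check. The sketch below assumes the checklist is kept as a mapping from feature name to a list of checks; both this format and the function name are hypothetical.

```python
def uncovered_features(changed_features: set, checklist: dict) -> list:
    """Features changed in this iteration that have no regression checks yet."""
    return sorted(name for name in changed_features if not checklist.get(name))
```

Running such a check at the end of each iteration shows which new or changed features still need entries before the next regression run.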

What Is Important to Test Before Release

As the project nears release, three additional types of testing become necessary:

- Network testing, if an online project is being launched;

- Compatibility testing;

- Performance testing.

Surprises can occur at any of these stages.

In our case, we only conducted compatibility and performance testing.

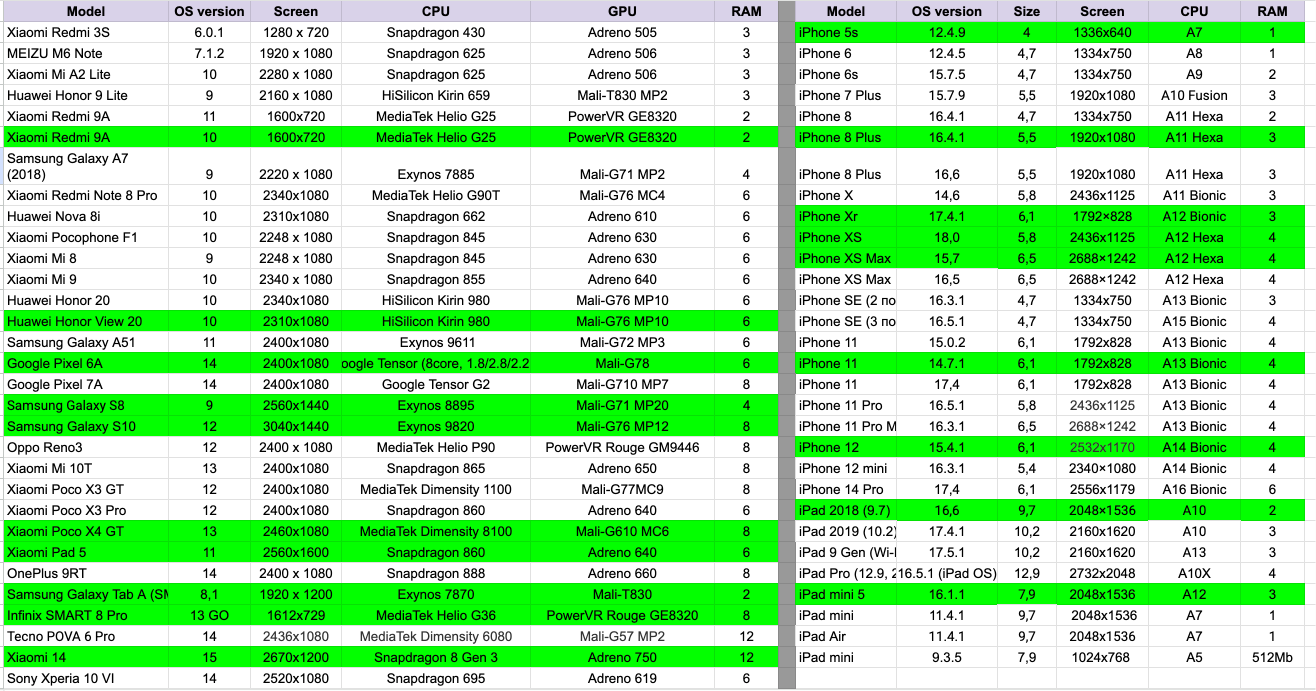

For compatibility testing, we checked various form factors. We tested functionality on a large number of popular devices with varying OS versions, interfaces (for Android), resolutions, aspect ratios, and hardware.

On each device, it’s crucial not only to launch the application but to run smoke tests, accessing all game functionalities, especially third-party SDKs and OS features.

At SunStrike, we have quite a wide range of devices, so this wasn’t an issue.

Device list for compatibility and performance tests

Performance testing followed a similar pattern. However, here we were more interested in CPU, GPU, and RAM specifics. Based on these parameters, we categorized devices into three performance levels—high, medium, and low.
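One possible way to sort devices into the three levels is to let the weakest component decide. The sketch below is an assumption: the benchmark scores, the threshold values, and the `DeviceSpec` type are hypothetical and are not the criteria used on the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceSpec:
    name: str
    ram_gb: float
    cpu_score: int  # hypothetical benchmark score
    gpu_score: int  # hypothetical benchmark score


# Hypothetical thresholds; a real project would calibrate them on its own data.
LOW_LIMITS = {"ram_gb": 4, "cpu_score": 300, "gpu_score": 300}
HIGH_LIMITS = {"ram_gb": 8, "cpu_score": 700, "gpu_score": 700}


def performance_tier(spec: DeviceSpec) -> str:
    """Return "low", "medium", or "high" for a device."""
    values = {
        "ram_gb": spec.ram_gb,
        "cpu_score": spec.cpu_score,
        "gpu_score": spec.gpu_score,
    }
    # Any parameter below the low limit puts the device in the low tier.
    if any(values[key] < LOW_LIMITS[key] for key in values):
        return "low"
    # Only devices that meet every high limit count as high tier.
    if all(values[key] >= HIGH_LIMITS[key] for key in values):
        return "high"
    return "medium"
```

With this rule, a device with a strong GPU but little RAM still lands in the low tier, which matches how a memory-bound game would behave on it.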

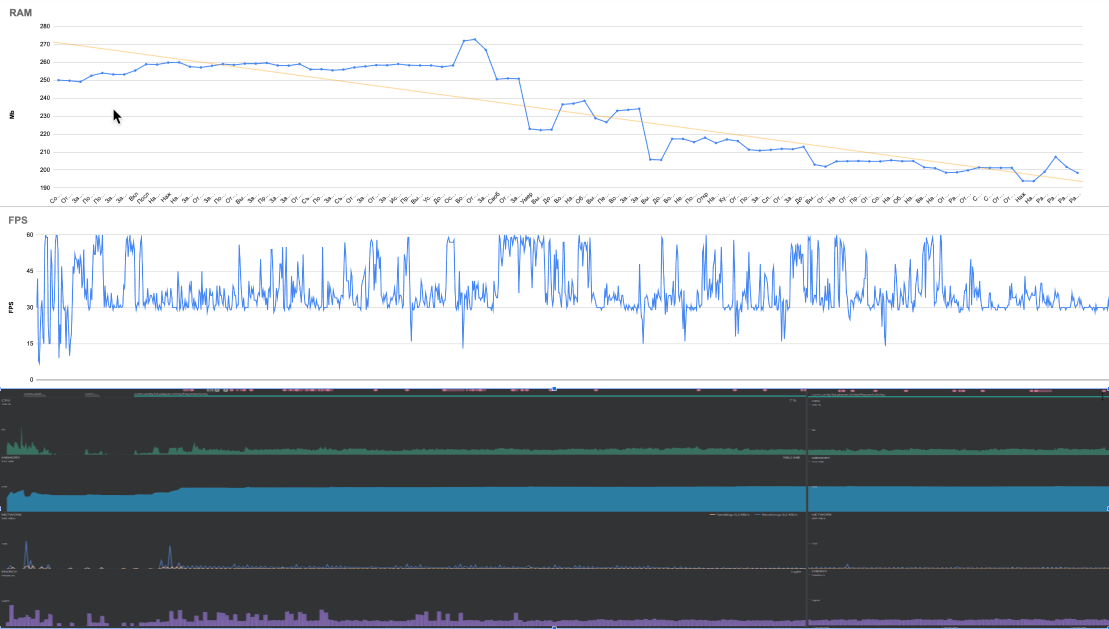

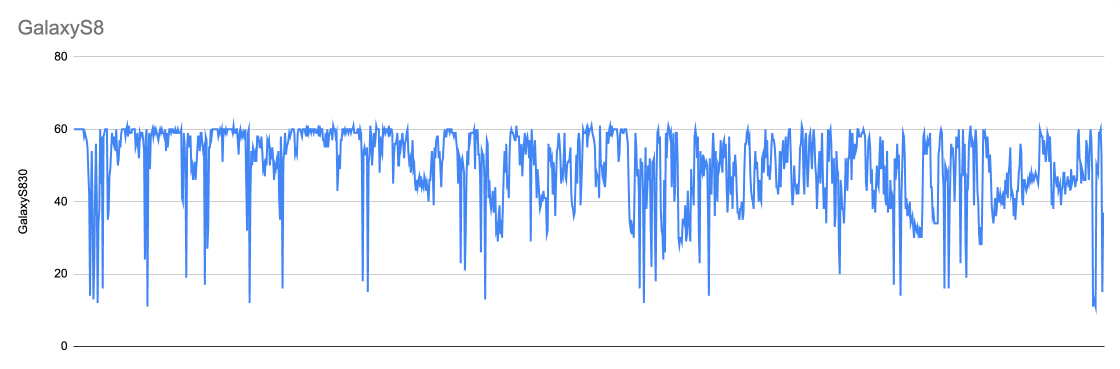

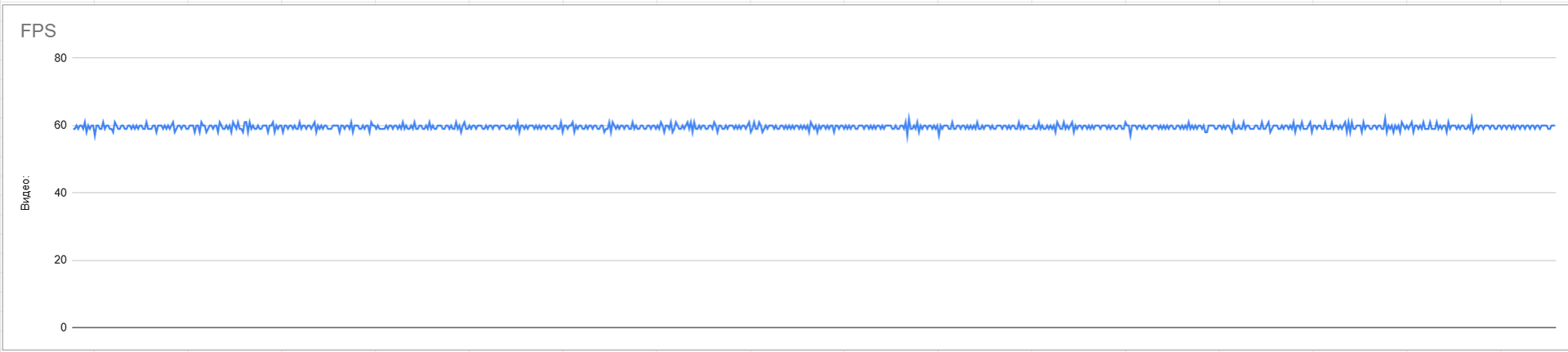

Game session performance test with RAM / FPS / network / CPU (FPS spikes detected)

Game session performance test with FPS (spikes detected)

Game session performance test with FPS (spikes detected)

Game session performance test with FPS (smooth with no spikes)

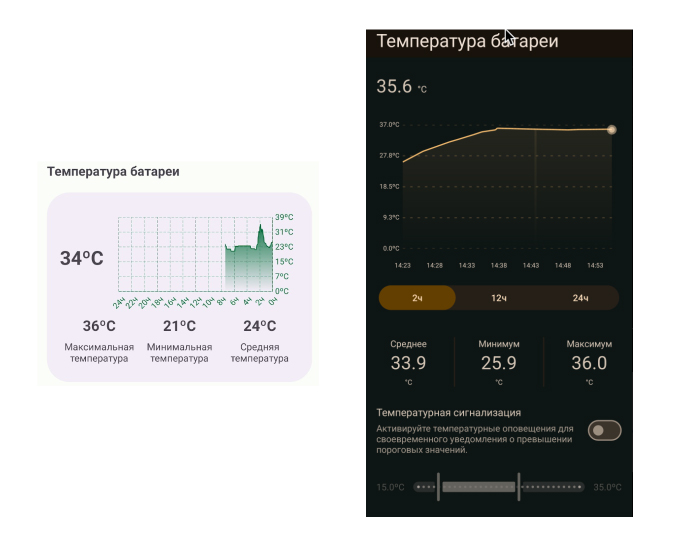

Generally, information about FPS and RAM behavior suffices, but occasionally we also measure energy consumption and heating if intuitive testing reveals uncomfortable playing conditions.
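FPS spikes like those in the captions can also be found automatically in a recorded session. The sketch below assumes the profiler exports frame times in milliseconds and treats any frame that takes more than twice the median as a spike. The input format, the factor of 2, and the function names are assumptions, not the tooling used on the project.

```python
from statistics import median


def find_spikes(frame_times_ms: list, factor: float = 2.0) -> list:
    """Indices of frames that took more than `factor` times the median frame time."""
    if not frame_times_ms:
        return []
    threshold = median(frame_times_ms) * factor
    return [i for i, t in enumerate(frame_times_ms) if t > threshold]


def average_fps(frame_times_ms: list) -> float:
    """Average frames per second over the recorded session."""
    total_ms = sum(frame_times_ms)
    return 1000.0 * len(frame_times_ms) / total_ms if total_ms else 0.0
```

Comparing against the median rather than a fixed value lets the same check work on low-end and high-end devices, whose normal frame times differ.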

Temperature change graphs

We also had to test notifications separately, and this went without surprises.

After dividing notifications into client-side and server-side ones and getting to grips with the developer’s tools, it was straightforward to check notifications for arrival, grouping, and disappearance according to design document parameters.

Localization testing sometimes takes place before launch as well (it often concerns the UI, such as checking how text in other languages displays on buttons and in context menus). We didn't need it for our game, because translation into other languages was not planned during our testing.

Soft Launch

Over time, the development team grew. This sped up development and increased the testing workload, so a full-fledged QA team had to be assembled for the game.

In the end, one mid-level and two junior specialists joined the QA lead already working on the project. After onboarding, the team was ready for the new pace of work, just as the project was entering a soft launch.

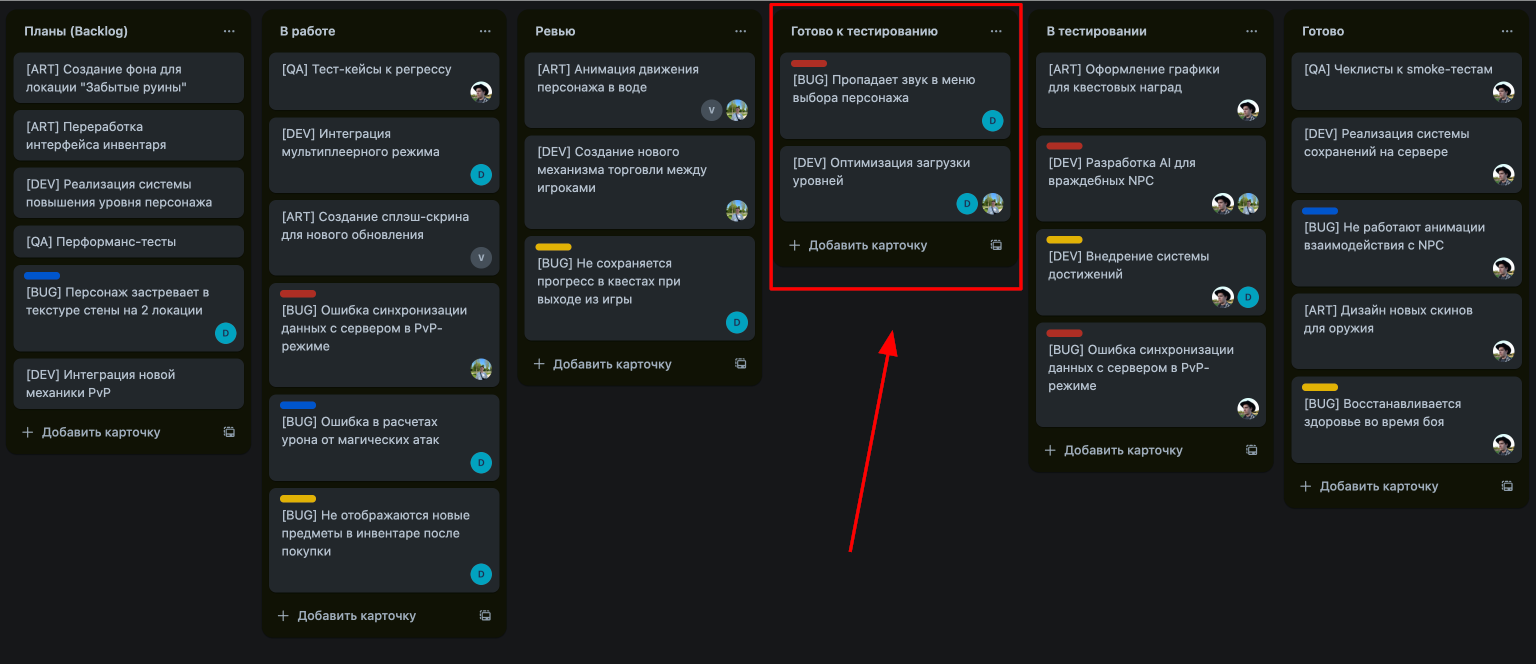

The release highlighted an issue in the previously established workflow. It became apparent that the development team couldn’t see which tasks QA was currently handling and which ones awaited action.

Instances occurred where developers moved tasks already under testing to incorrect columns, causing confusion and sometimes duplicating work.

After the soft launch, it was decided to make a minor change in ticket statuses and workflow. An additional column “Ready for Testing” was created for QA, along with new rules stating:

- Only QA could manipulate tasks and bugs within this column;

- When QA takes up a task, it transitions to the existing “In Testing” column on our Kanban board.
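The two rules can be written down as a small permission check. This is a sketch under assumptions: the column names come from the text, while the role strings, the card format, and the function names are hypothetical and do not describe a real tracker integration.

```python
QA_ONLY_COLUMN = "Ready for Testing"


def can_move(role: str, from_column: str) -> bool:
    """Only QA may move cards that sit in the QA-only column."""
    return role == "qa" or from_column != QA_ONLY_COLUMN


def take_for_testing(card: dict, role: str, tester: str) -> dict:
    """Move a card from "Ready for Testing" to "In Testing" when QA picks it up."""
    if card["column"] != QA_ONLY_COLUMN:
        raise ValueError("Card is not ready for testing")
    if not can_move(role, card["column"]):
        raise PermissionError("Only QA can move cards out of 'Ready for Testing'")
    # Return a copy so the original card is not modified in place.
    return {**card, "column": "In Testing", "tester": tester}
```

Recording the tester on the card also addresses the visibility problem: developers can see which tasks QA is handling and which are still waiting.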

Kanban board with the new column

Additionally, rules for working with task or bug flags were updated.

For instance, if a medium-priority bug was on the task list at release time, the flag wasn’t set. However, such bugs were always raised in chats and calls so that a joint decision could be made on whether to release with them or fix them first. This kept everyone's actions coordinated and saved the time remaining before the update.

Not Everything Goes Smoothly

During the soft launch phase, the client company began enhancing functionality to make the game more appealing to players. This phase brought challenges for the QA team as well: the game started experiencing noticeable FPS drops and previously unseen artifacts.

We set out to identify the causes. We began by testing performance under low, medium, and high load on three groups of devices:

- Weak: Huawei Honor 9 Lite and Motorola Moto G Stylus (2022);

- Medium: Sony Xperia 10 VI and Huawei Honor View 20;

- Strong: Xiaomi Pad 5 and Google Pixel 6a.

Through tests, we identified two key points:

- The load grows in direct proportion to progress in the game;

- The FPS drops could not be fixed because of the project's specifics.

Based on this, a temporary decision was made to cap FPS at 30 frames per second to make frame drops and stuttering less noticeable to players.
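The idea behind a frame cap can be shown with a minimal loop that waits out the rest of each frame's time budget. This is a generic sketch, not the project's implementation: in practice the cap is usually an engine setting, and the `run_capped` function with its injectable `clock` and `sleep` parameters is hypothetical.

```python
import time

TARGET_FPS = 30
FRAME_BUDGET = 1.0 / TARGET_FPS  # seconds available for one frame


def run_capped(update, frames, clock=time.perf_counter, sleep=time.sleep):
    """Call update() `frames` times, never faster than TARGET_FPS."""
    for _ in range(frames):
        start = clock()
        update()
        remaining = FRAME_BUDGET - (clock() - start)
        # Fast frames wait for the rest of the budget; slow frames do not wait.
        if remaining > 0:
            sleep(remaining)
```

With a cap of 30, a frame that takes 25 ms is displayed on the same schedule as one that takes 10 ms, so fluctuations below the budget no longer show up as stutter.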

Interestingly, the new round of testing revealed visual bugs on the Motorola Moto G Stylus that we hadn’t seen on other devices before.

At first we considered the issue device-specific and began looking for devices with a similar CPU and GPU. We chose another device built on the same MediaTek platform, and it showed a similar fault.

It became clear that the issue was related to how the game ran on MediaTek processors. The development team successfully resolved it.

Post-Production with the Released Game

No project, no matter how excellent, escapes negative feedback post-release. So our next step was to review those comments.

A bot that forwards all reviews to a dedicated channel in our work messenger helped us here, as did Amplitude.

The bot made it possible to filter by the details mentioned in a comment and find the user who had presumably run into the problem.

Next, we analyzed the user's profile and the history of their in-game actions. Based on this data, we could be nearly 100% sure that we had the right user.

Ultimately, we arrived at a scheme where we identify the user's nickname, locate them on the game servers, copy their history of interactions with the game, and thoroughly investigate to resolve the bug.
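The narrowing step in this scheme can be sketched as a filter over player records. Everything here is an assumption made for illustration: the `Review` and `PlayerSession` types, their fields, and the two-day window are hypothetical and do not reflect the actual bot, Amplitude, or the game servers.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Review:
    text: str
    device: str
    app_version: str
    posted_on: date


@dataclass(frozen=True)
class PlayerSession:
    nickname: str
    device: str
    app_version: str
    last_seen: date
    events: tuple


def candidate_players(review: Review, sessions: list, max_days_apart: int = 2) -> list:
    """Players whose device, app version, and activity date fit the review."""
    return [
        s
        for s in sessions
        if s.device == review.device
        and s.app_version == review.app_version
        and abs((review.posted_on - s.last_seen).days) <= max_days_apart
    ]


def narrow_by_event(candidates: list, event: str) -> list:
    """Keep only candidates whose history contains the event the review describes."""
    return [s for s in candidates if event in s.events]
```

If the filters leave a single candidate, their action history is the one worth copying and replaying to reproduce the bug.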

Conclusion

Through external testing efforts, the client:

- Was relieved of having to build and manage its own QA team;

- Achieved greater transparency in testing processes and documentation maintenance (e.g., understanding progress in checklists and functional test cases, what was done and what wasn’t);

- Received clear pre-release test reports, allowing the development team to prioritize bug fixes sensibly;

- Received support during launch in the form of feedback monitoring and bug handling.

Certainly, it’s impossible to cover everything we encountered in one article, but we aimed to highlight the key points. We hope the experience of the SunStrike QA team was helpful for you. We wish you successful releases!