Researchers release MarioGPT model to generate Super Mario Bros. levels from text prompts

A group of Danish researchers have created a model to generate Super Mario Bros. levels. Here is how MarioGPT works and what limitations it has.

What happened?

- As spotted by TechCrunch, a group of researchers at IT University of Copenhagen released MarioGPT, a fine-tuned GPT2 model described as the “first text-to-level model.”

- They published a paper describing the model and its features, also posting MarioGPT on GitHub. It was trained on Super Mario Bros. and its sequel, The Lost Levels, to help generate tile-based levels for the game using a text prompt.

- Unlike previous Mario generators, MarioGPT relies on generative AI rather than on assembling levels from pre-created tilesets.

- Nintendo hasn’t reacted to this project yet. However, its lawyers could take issue with it, given the company’s history of copyright disputes.

How does MarioGPT work?

- MarioGPT is based on DistilGPT2, a distilled version of the GPT-2 large language model (LLM). However, it can’t understand Super Mario Bros. levels natively, so they first have to be rendered as text.

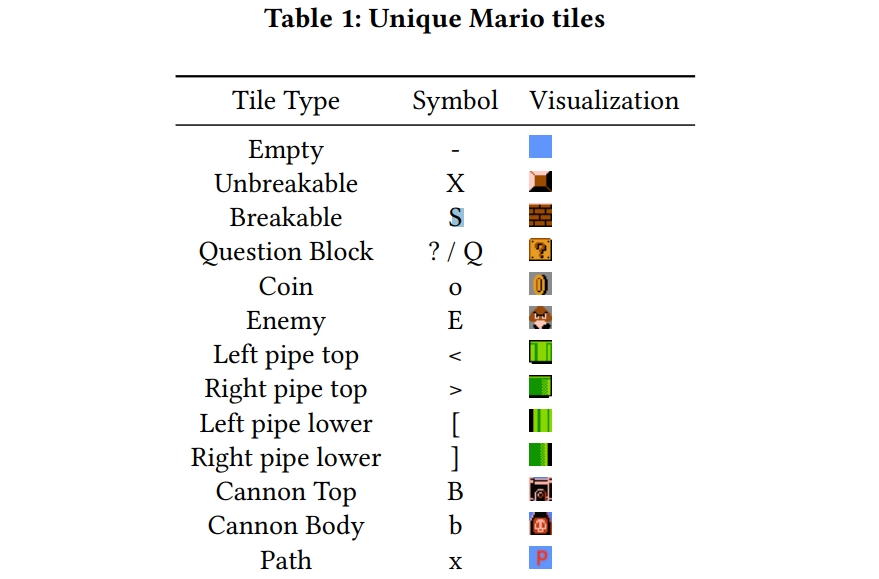

- This allows the model to predict the next tokens in a sequence. Levels are represented as strings of tiles, where each character encodes an in-game object. For example, question blocks are represented as “?” or “Q”, breakable tiles as “S”, enemies as “E”, and coins as “o”.

Mario tiles represented as symbols for MarioGPT
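
To make the text encoding more concrete, here is a minimal Python sketch of how a small level grid could be turned into a single string and back. The symbols “?”, “Q”, “S”, “E” and “o” come from the description above. The “-” (empty) and “X” (ground) symbols, the column-by-column reading order, and the function names are assumptions made for illustration, not the researchers' actual code.

```python
# Symbols named in the article, plus two assumed ones for illustration.
TILES = {
    "?": "question block",
    "Q": "question block",
    "S": "breakable tile",
    "E": "enemy",
    "o": "coin",
    "-": "empty space (assumed symbol)",
    "X": "ground (assumed symbol)",
}


def flatten_level(rows):
    """Flatten a level grid into one string, reading column by column."""
    height = len(rows)
    width = len(rows[0])
    if any(len(row) != width for row in rows):
        raise ValueError("all rows must have the same width")
    return "".join(rows[y][x] for x in range(width) for y in range(height))


def unflatten_level(text, height):
    """Rebuild the row-based grid from a column-by-column string."""
    if len(text) % height != 0:
        raise ValueError("text length must be a multiple of the level height")
    width = len(text) // height
    return ["".join(text[x * height + y] for x in range(width)) for y in range(height)]


# A tiny hypothetical level: 5 rows tall, 6 columns wide.
LEVEL = [
    "------",
    "--?S--",
    "----o-",
    "---E--",
    "XXXXXX",
]

flat = flatten_level(LEVEL)
restored = unflatten_level(flat, height=len(LEVEL))
```

Because the flattened string is ordinary text, a language model can treat each tile as part of a sequence and predict what comes next, then the output can be converted back into a grid.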

- Once trained on these strings, MarioGPT can learn the patterns in them and reproduce them as actual in-game levels.

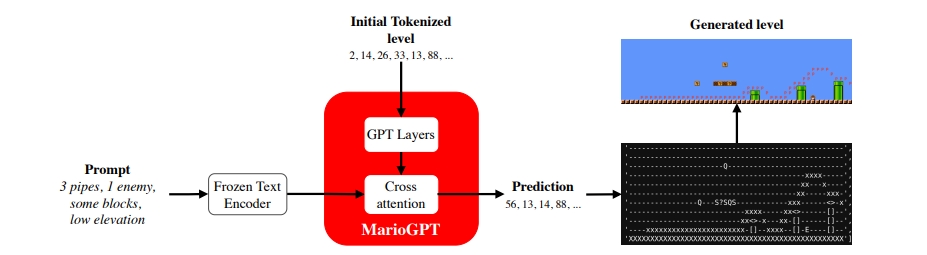

MarioGPT prediction pipeline, from simple text prompt to a generated level

- One of the researchers on the team, modl.ai co-founder Sebastian Risi, explained on Twitter that to incorporate prompt information, “we utilize a frozen text encoder in the form of a pretrained bidirectional LLM (BART), and output the average hidden states of the model’s forward pass.”

MarioGPT is a finetuned GPT2 model that is trained on a subset of Super Mario Bros levels. To incorporate prompt information, we utilize a frozen text encoder in the form of a pretrained bidirectional LLM (BART), and output the average hidden states of the model’s forward pass. pic.twitter.com/H6ZSPUFgdF

— Sebastian Risi (@risi1979) February 14, 2023
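
The averaging step Risi mentions can be sketched in plain Python. This is only an illustration of mean pooling over per-token hidden states: the numbers below are made up rather than real BART outputs, and the `mean_pool` helper and the padding mask are assumptions, not part of the MarioGPT code.

```python
def mean_pool(hidden_states, attention_mask):
    """Average per-token vectors into one vector, skipping padded positions."""
    kept = [vec for vec, keep in zip(hidden_states, attention_mask) if keep]
    if not kept:
        raise ValueError("no unmasked tokens to average")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


# Hypothetical hidden states for a 4-token prompt with 2-dimensional vectors.
# The last position is padding and is excluded by the mask.
hidden = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [0.0, 0.0]]
mask = [1, 1, 1, 0]

prompt_vector = mean_pool(hidden, mask)
```

The result is a single fixed-size vector that summarizes the whole prompt, regardless of how many tokens the prompt contains.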

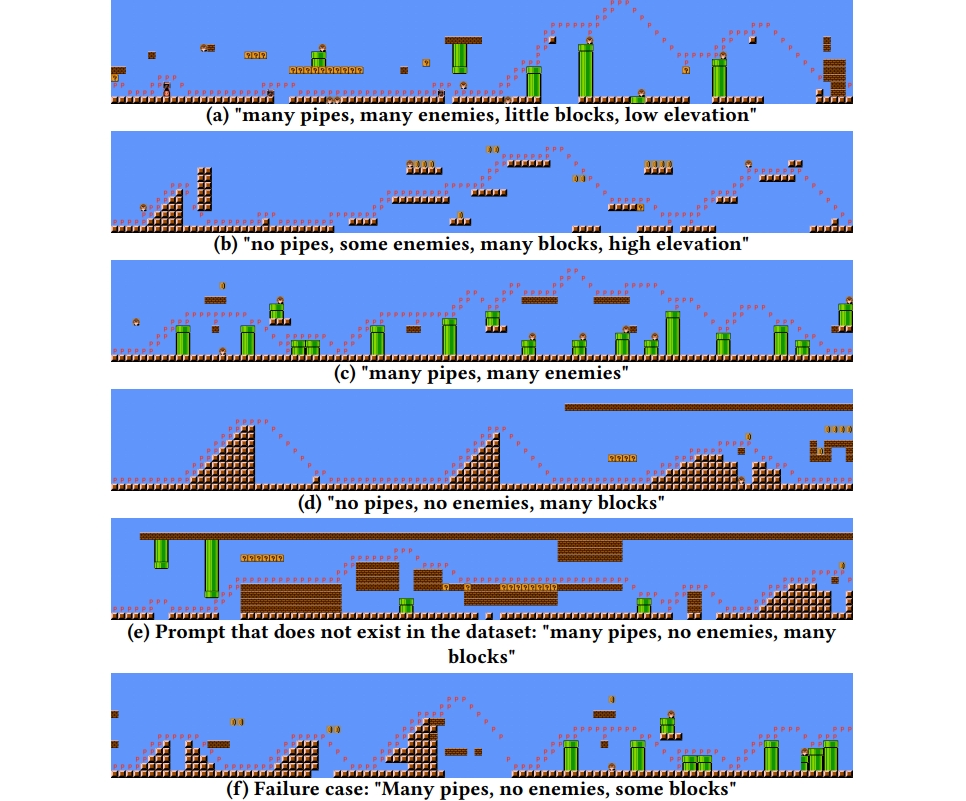

- In most cases, MarioGPT can successfully generate in-game levels from various text prompts (e.g. “no pipes, no enemies, many blocks” or “many pipes, many enemies, little blocks, low elevation”). However, it occasionally fails to produce a level that matches the prompt, so the model is not perfect.

Examples of Super Mario Bros. levels generated using MarioGPT; (f) is a failure case
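
The example prompts quoted above follow a regular pattern, which can be sketched as a small helper. The keyword sets here are partly assumed: “no”, “little”, “many” and “low” appear in the quoted prompts, while “some” and “high” are assumptions, and `build_prompt` is a hypothetical function rather than part of the released code.

```python
# Quantity and elevation keywords; "some" and "high" are assumed values.
QUANTITIES = ("no", "little", "some", "many")
ELEVATIONS = ("low", "high")


def build_prompt(pipes, enemies, blocks, elevation=None):
    """Compose a text prompt in the style of the examples quoted above."""
    for quantity in (pipes, enemies, blocks):
        if quantity not in QUANTITIES:
            raise ValueError(f"unknown quantity: {quantity!r}")
    parts = [f"{pipes} pipes", f"{enemies} enemies", f"{blocks} blocks"]
    if elevation is not None:
        if elevation not in ELEVATIONS:
            raise ValueError(f"unknown elevation: {elevation!r}")
        parts.append(f"{elevation} elevation")
    return ", ".join(parts)


first = build_prompt("no", "no", "many")
second = build_prompt("many", "many", "little", elevation="low")
```

Keeping prompts to a small fixed vocabulary like this makes it easier to check whether a generated level actually matches what was asked for.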

- “I think with small datasets in general, GPT2 is better suited than GPT3, while also being much more lightweight and easier to train,” the paper’s lead author Shyam Sudhakaran told TechCrunch. “However, in the future, with bigger datasets and more complicated prompts, we may need to use a more sophisticated model like GPT3.”

Thanks for reading! Here are some videos of an A* agent enjoying MarioGPT-generated levels. Enjoy! 😄 pic.twitter.com/mZJdp2WV83

— Sebastian Risi (@risi1979) February 14, 2023