How AI-assisted RPG Tales of Syn utilizes Stable Diffusion and ChatGPT to create assets and dialogues

Tales of Syn is an isometric RPG in the style of classic Fallout titles. The twist is how it uses the power of Stable Diffusion and ChatGPT to create game assets, backgrounds, character models, and dialogues.

What is Tales of Syn?

- Developed by UK artist Hackmans, Tales of Syn started gaining attention last month. The game will also be featured at the upcoming Culture AI Film and Games Festival 2023.

- Tales of Syn is a video game and comic book set in the world of Giga Bloc C. It is described as a “sprawling metropolis in a future time period of Earth.”

- It is a classic dystopian cyberpunk world that combines high tech with low life. The player will explore both the affluent upper levels, controlled by the Echelon Corporation, and the poor lower levels, governed by rival factions.

- “A core aim of the project is to test emerging AI technologies to see how they can help augment the design process both in production and also aiding narrative storytelling,” the About section reads.

- What really caught our attention, though, was a series of blog posts in which Hackmans details character creation and other aspects of AI-assisted development. Thanks to their step-by-step breakdowns, these posts could be genuinely useful to other developers.

Tales of Syn, an isometric RPG I’m developing as a narrative adventure linked to my comic book. Also exploring the use of AI tools and workflows to see what makes sense in production and if any value is added to the player experience #madewithunity #gamedev #indiedev #unity3d

— -=HACKMANS=- (@_hackmans_) February 11, 2023

How is Stable Diffusion used in Tales of Syn?

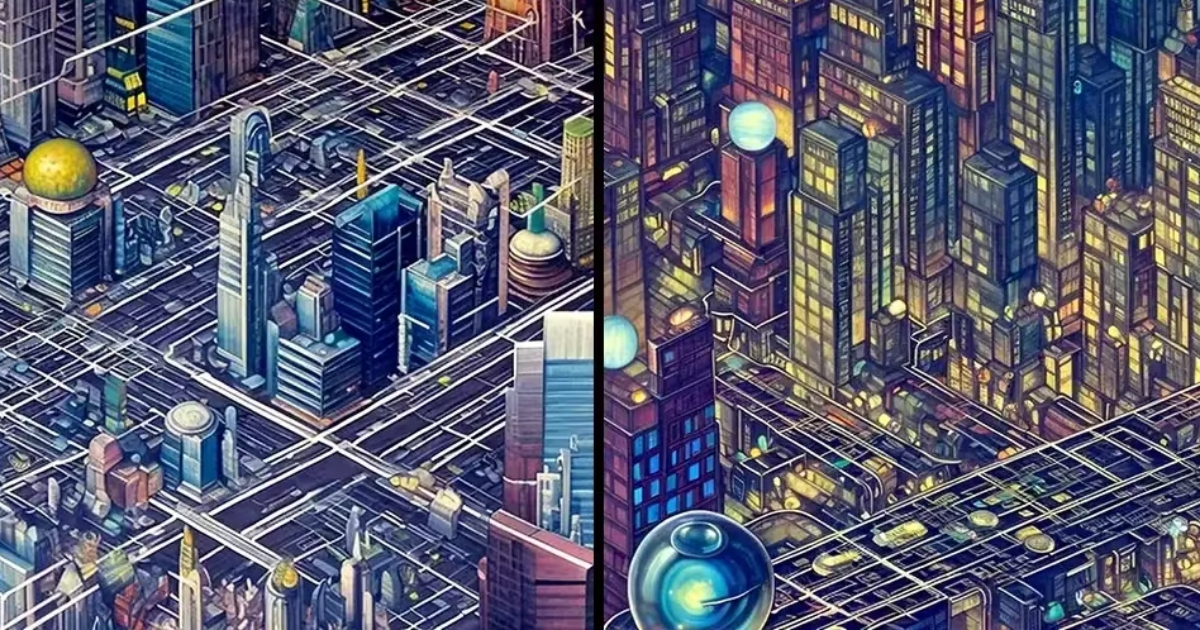

- Hackmans first fine-tuned a custom model on aerial photography to generate concepts for isometric views of cityscapes.

- The artist captured Google Earth imagery of Asian cities and built a dataset of eight images cropped to 512×512 pixels. Hackmans then trained on it as a style using Joe Penna’s Dreambooth implementation, with Stable Diffusion 1.5 as the base model.

- The first results were already promising as concepts, but they suffered from low image quality and indistinct road lines.

Top: Some images from the original dataset of Google Earth photos; Bottom: Two examples of the first generated city concepts
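The write-up does not say exactly how the Google Earth screenshots were cut down to 512×512. As a rough sketch, assuming a simple center crop (the helper `center_crop_box` is hypothetical), the crop box for one square tile can be computed as below. The returned tuple follows the (left, upper, right, lower) convention that Pillow's `Image.crop` expects.

```python
def center_crop_box(width: int, height: int, size: int = 512) -> tuple[int, int, int, int]:
    """Return a (left, upper, right, lower) box for a centered size x size crop.

    Hypothetical helper: assumes the screenshot is at least `size` pixels
    in both dimensions (downscale it first otherwise).
    """
    if width < size or height < size:
        raise ValueError(f"image {width}x{height} is smaller than {size}x{size}")
    left = (width - size) // 2
    upper = (height - size) // 2
    return (left, upper, left + size, upper + size)


# A 1920x1080 screenshot yields a 512x512 tile taken from its center.
print(center_crop_box(1920, 1080))  # (704, 284, 1216, 796)
```

With only eight training images, each crop matters, so in practice one would likely pick the tile by hand rather than always taking the center.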

- Another issue was that Hackmans couldn’t generate any painterly outputs. So the artist experimented with Automatic 1111, an open-source UI for Stable Diffusion, and its prompt editing feature.

- “By starting the generations in the trained style for the first few steps and switching to oil painting or similar art modifiers it provided a much wider variety of style outputs which still retained the aerial isometric look,” Hackmans noted.

- Here are some results generated using the following prompt: “futuristic city street, metropolis, dystopian, in [:vibrant oil painting:0.25]”.

- According to Hackmans, better results can be achieved by using higher-quality dataset images and more dataset examples.

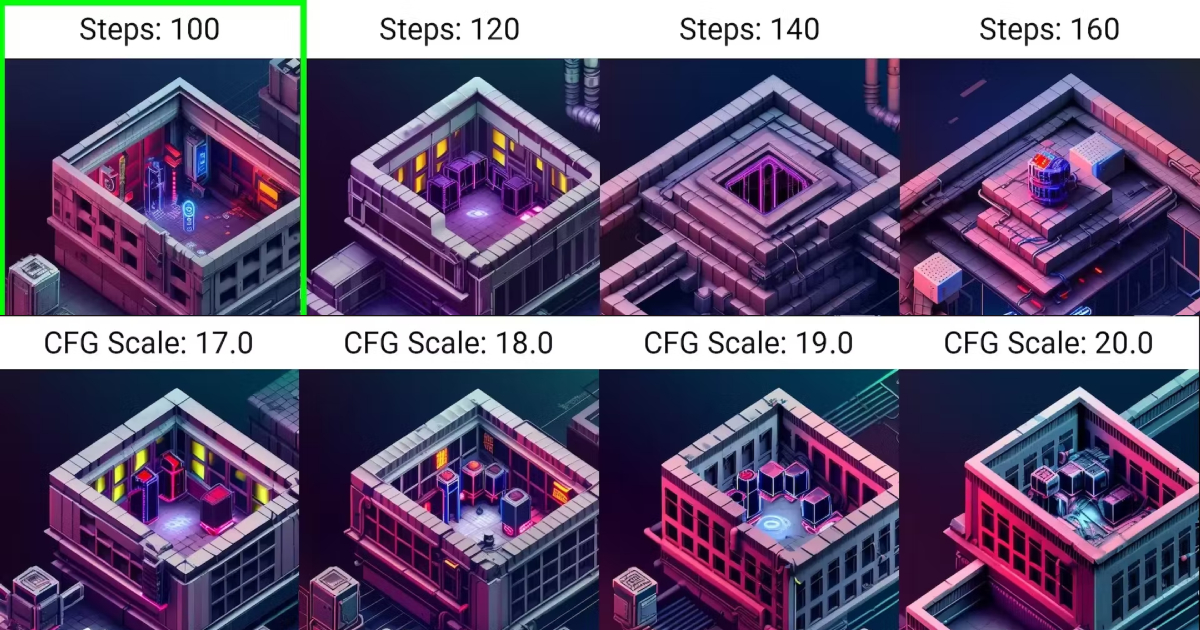
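In Automatic 1111, the prompt editing syntax `[from:to:when]` uses the `from` text for the early sampling steps and swaps in the `to` text afterwards. A `when` below 1 is read as a fraction of the total steps, so `0.25` switches after the first quarter. The sketch below is a simplified model of that documented behavior, not the web UI's own parser (which also handles nesting and other syntax). In the prompt as reproduced here, the `from` part is empty, so the sketch uses a hypothetical placeholder, `style_token`, to make the switch visible.

```python
def prompt_for_step(before: str, after: str, when: float, step: int, total_steps: int) -> str:
    """Return the edited fragment active at a 1-indexed sampling step.

    Simplified model of [before:after:when]: a `when` below 1 is treated
    as a fraction of the total steps, otherwise as an absolute step number.
    """
    switch_step = int(when * total_steps) if when < 1 else int(when)
    return before if step <= switch_step else after


def schedule(base: str, before: str, after: str, when: float, total_steps: int) -> list[str]:
    """Expand the base prompt plus the edited fragment into one prompt per step."""
    prompts = []
    for step in range(1, total_steps + 1):
        fragment = prompt_for_step(before, after, when, step, total_steps)
        prompts.append(f"{base} {fragment}".strip())
    return prompts


# "style_token" is a hypothetical stand-in for whatever text the early steps use.
steps = schedule(
    "futuristic city street, metropolis, dystopian, in",
    "style_token",
    "vibrant oil painting",
    0.25,
    total_steps=20,
)
print(steps[0])   # futuristic city street, metropolis, dystopian, in style_token
print(steps[-1])  # futuristic city street, metropolis, dystopian, in vibrant oil painting
```

With 20 steps, the first five establish the composition under the trained style, and the remaining fifteen push the rendering toward oil painting, which matches the effect Hackmans describes.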

- Hackmans also used Stable Diffusion to create isometric backgrounds for Tales of Syn. The approach was to write detailed prompts while experimenting with the number of sampling steps and the classifier-free guidance scale (CFG scale), which controls how closely the generated image follows the prompt.

- The first decent results came when Hackmans raised the number of steps into the hundreds and set the CFG scale between 15 and 30.

Different outputs for different steps and CFG scale settings
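The CFG scale comes from classifier-free guidance. At each step, the sampler predicts the noise twice, once with the prompt and once without, and extrapolates from the unconditional prediction toward the conditional one: guided = uncond + s × (cond − uncond). A scale of 1 returns the conditional prediction unchanged, while larger values such as the 15 to 30 range above push the image harder toward the prompt. The sketch below applies that formula to plain Python lists standing in for the model's noise predictions; the numbers are made up for illustration.

```python
def guided_noise(eps_uncond: list[float], eps_cond: list[float], cfg_scale: float) -> list[float]:
    """Classifier-free guidance: eps_uncond + scale * (eps_cond - eps_uncond)."""
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same shape")
    return [u + cfg_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Toy predictions (not real model output).
uncond = [0.10, -0.20, 0.05]
cond = [0.30, -0.10, 0.05]

for scale in (1.0, 7.0, 15.0):
    print(scale, guided_noise(uncond, cond, scale))
```

Components where the two predictions agree (the last one here) are unaffected by the scale. Only the difference that the prompt makes is amplified.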

- To edit the output images, Hackmans used Photoshop to paint out strong colors before bringing the backgrounds into Unity. The goal was to remove all the lighting from the images and then re-create it in the engine with custom shaders.

- In the blog post, Hackmans also describes the methods used to create depth and normal maps, how shadows were made for the in-game scene, and how the output image was upscaled for use as the background.

- For character creation, Hackmans looked for a way to turn Stable Diffusion-generated images into 3D models. The characters had to match the style of the game world and stay consistent throughout the entire pipeline.

- First, Hackmans drew outlines for the character with Artstudio Pro over a proportion reference guide they found online. Then the artist added some colors and basic shading to “inform the clothing materials and details when processing the image with Stable Diffusion.”

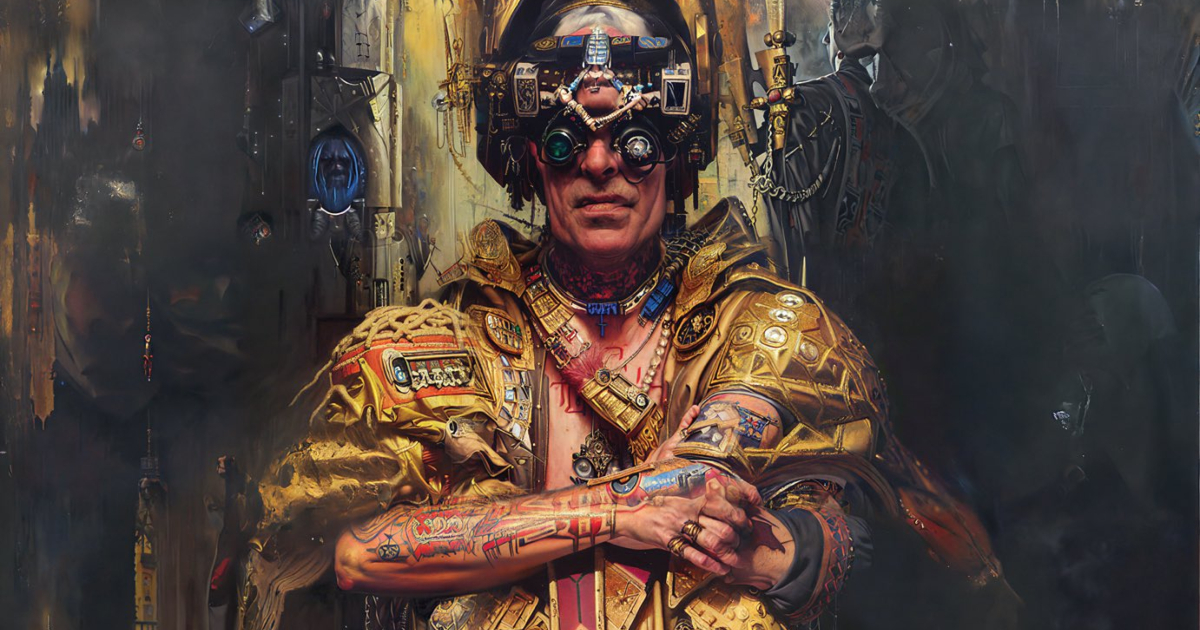

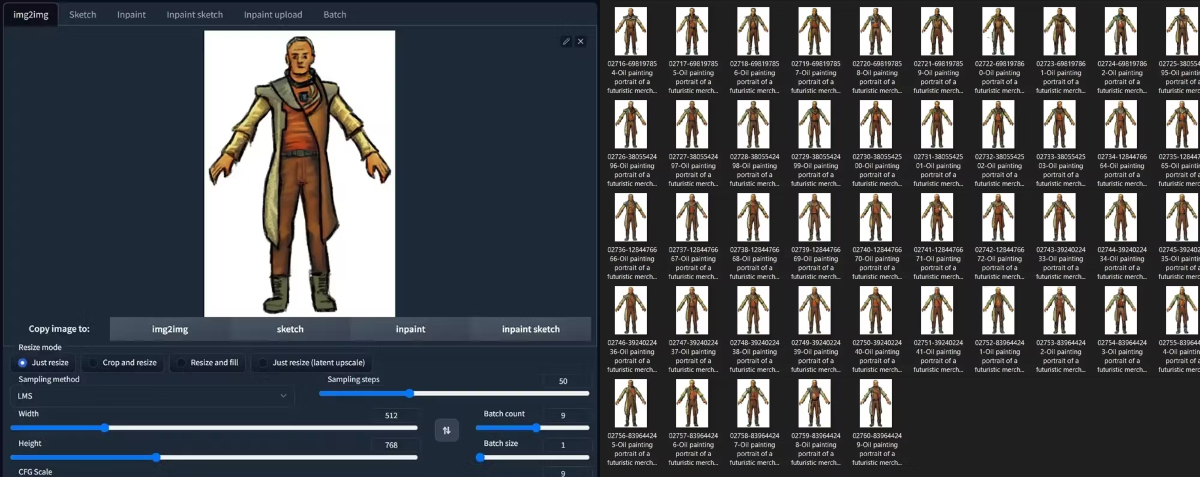

- Hackmans fed these sketches into the img2img mode of Automatic 1111 to generate dozens of oil-painted portraits of a futuristic merchant.

- After that, Hackmans picked the best outputs and refined them in Photoshop, layering the images on top of each other to add certain details or remove specific regions.

- One problem with the AI-generated images was poorly rendered hands, which the artist also had to fix later.

Raw sketches (top) compared to the final result (bottom)
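Layering several img2img outputs and hiding or revealing parts of each is, in Photoshop terms, compositing with layer masks. A minimal sketch of the arithmetic involved, assuming grayscale images stored as nested lists of values in [0, 1] and a mask in which 1 means "show the top layer" (the `composite` function is hypothetical, not part of any tool mentioned above):

```python
def composite(base: list[list[float]], top: list[list[float]], mask: list[list[float]]) -> list[list[float]]:
    """Per-pixel linear blend: base * (1 - m) + top * m.

    A mask value of 0 keeps the base image, 1 shows the top layer, and
    values in between feather the transition, like a soft brush on a layer mask.
    """
    return [
        [b * (1.0 - m) + t * m for b, t, m in zip(b_row, t_row, m_row)]
        for b_row, t_row, m_row in zip(base, top, mask)
    ]


# Keep the left column from one generation and take the right column from another.
base = [[0.2, 0.2], [0.2, 0.2]]
top = [[0.9, 0.9], [0.9, 0.9]]
mask = [[0.0, 1.0], [0.0, 1.0]]
print(composite(base, top, mask))  # [[0.2, 0.9], [0.2, 0.9]]
```

Color images would apply the same blend per channel. A painted mask with soft edges is what keeps the seams between different generations from showing.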

- The next step was to load the image into Spotlight, a projection texturing system in ZBrush, and form the main shapes using the DynaMesh mesh generation tool.

- The rest of the process involved sculpting, creating texture maps, and other 3D modeling steps. “I achieved a workflow that met my initial goals of generating 3D characters from Stable Diffusion images, which were in keeping with the style of my comic,” Hackmans wrote.
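Going back to the isometric backgrounds: Hackmans removed the painted lighting and re-created it with custom shaders, using depth and normal maps. This article does not reproduce those methods, so the sketch below is only a generic illustration, not Hackmans's implementation. It estimates per-pixel normals from a small height grid with central differences, assuming larger values are closer to the viewer, and then applies Lambertian diffuse lighting to an unlit albedo value, which is the basic idea behind relighting a flat background. The grid, the light direction, and the `strength` parameter are all assumptions.

```python
import math

Vec3 = tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def normals_from_depth(depth: list[list[float]], strength: float = 1.0) -> list[list[Vec3]]:
    """Estimate a unit normal per pixel from height differences (central differences).

    Assumes larger values are closer to the viewer. Border pixels are clamped
    to their nearest neighbour. `strength` (hypothetical knob) exaggerates slopes.
    """
    h, w = len(depth), len(depth[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            left = depth[y][max(x - 1, 0)]
            right = depth[y][min(x + 1, w - 1)]
            up = depth[max(y - 1, 0)][x]
            down = depth[min(y + 1, h - 1)][x]
            dx = (right - left) * 0.5 * strength
            dy = (down - up) * 0.5 * strength
            row.append(normalize((-dx, -dy, 1.0)))
        normals.append(row)
    return normals


def lambert(albedo: float, normal: Vec3, light_dir: Vec3, ambient: float = 0.1) -> float:
    """Diffuse shading: albedo * (ambient + max(0, N . L)), clamped to 1.

    `normal` is expected to be unit length; `light_dir` points toward the light.
    """
    light = normalize(light_dir)
    n_dot_l = max(0.0, sum(a * b for a, b in zip(normal, light)))
    return min(1.0, albedo * (ambient + n_dot_l))


# A tiny 3x3 height grid with a bump in the middle, lit from straight ahead.
depth = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0],
]
normals = normals_from_depth(depth)
print(lambert(0.8, normals[0][1], light_dir=(0.0, 0.0, 1.0)))
```

In Unity, the same dot product would run per pixel in a fragment shader, with the normal read from a texture instead of a Python list.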

How does Tales of Syn utilize ChatGPT?

- Hackmans uploaded a five-minute clip to YouTube showing how the player will be able to interact with non-player characters (NPCs).

- The game lets the player type free-form text into the dialogue window, and the NPC responds accordingly. The system is built on the GPT-3 language model.

- In the footage, the player asks the merchant what he sells and what weapons he makes, and then follows up with more specific questions such as “Do you have a tool that can hack into a network?” or “Can you teach me how to use the hacking tools?”

- Interestingly, the merchant’s voice is synthesized using ElevenLabs’ AI-voice generation tool.

- Hackmans also used ChatGPT to write some Unity scripts. “I used it a bit for my character navigation, but you still need to have some knowledge so that you can ask it to correct certain things or improve the scripts,” the developer noted on Reddit.

- It is worth noting that this NPC dialogue is only a prototype, so it is unclear what level of freedom the player will have in the final build or how this system will work out across the entire game.
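The footage does not show how the dialogue window talks to GPT-3. One common pattern for a completion-style model is to send a prompt that combines a short character description, the recent conversation, and the player's new line, ending with the NPC's name so the model continues in character. The sketch below only builds that prompt string. The `NpcConversation` class, the persona text, and the transcript format are all hypothetical, and the actual API request (plus any moderation or length limits) is left out.

```python
from dataclasses import dataclass, field


@dataclass
class NpcConversation:
    """Hypothetical state for one NPC dialogue window."""
    npc_name: str
    persona: str
    history: list[tuple[str, str]] = field(default_factory=list)  # (speaker, line)
    max_lines: int = 10  # must be positive; keeps the prompt short

    def build_prompt(self, player_line: str) -> str:
        """Assemble a completion-style prompt ending with the NPC's cue."""
        recent = self.history[-self.max_lines:]
        lines = [self.persona, ""]
        lines += [f"{speaker}: {text}" for speaker, text in recent]
        lines.append(f"Player: {player_line}")
        lines.append(f"{self.npc_name}:")  # the model continues from here
        return "\n".join(lines)

    def record(self, player_line: str, npc_reply: str) -> None:
        """Store a finished exchange so later prompts include it."""
        self.history.append(("Player", player_line))
        self.history.append((self.npc_name, npc_reply.strip()))


merchant = NpcConversation(
    npc_name="Merchant",
    persona="You are a merchant in Giga Bloc C who sells weapons and hacking tools. "
            "Stay in character and answer briefly.",
)
print(merchant.build_prompt("What do you sell?"))
```

Keeping only the last few lines of history is a simple way to bound prompt length. A shipped game would probably also need to filter replies so the NPC never breaks character or contradicts the story.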

More information about the AI-assisted character and background creation can be found in the full articles on the Tales of Syn website. Hackmans will also reveal more details about the game and its development at GDC 2023.