How AI Became the Secret Weapon in Game Testing — The SunStrike Case Study

The SunStrike team shared with App2Top their case study on implementing AI in the game testing pipeline.

Author — QA Department at SunStrike

Introduction

If you manage game development or are responsible for its quality, you know a tester's daily life: checking functionality, working with documentation, updating test cases, verifying bug fixes, and keeping up with design changes, all at the same time. Routine tasks consume 20-40% of the time, sometimes more.

In reality, a QA’s responsibilities are even broader. A tester is also involved in:

- sanity checks of new functionality;

- regression testing before releases;

- bug fix validation (ensuring nothing else broke after a fix);

- exploratory testing (e.g., what if a player does X during Y?);

- verifying in-game analytics;

- performance and stability testing;

- cross-platform and cross-browser testing.

And that's just part of the job. Each team adds its own tasks, such as working with internal tools, builds, migrations, SDKs, and more. These tasks often have to be handled concurrently, so the QA workload frequently turns out to be much larger than initially planned.

Critical Workload: From AAA to Indie

In large projects, work is usually carried out on dozens of features simultaneously, GDDs grow to hundreds of pages, and there are constant revisions and A/B tests on thousands of users. In such conditions, the QA department is overwhelmed: manually updating cases and reading documentation consumes a significant amount of time.

In smaller teams, it's even more challenging: one QA often fulfills the role of a whole department. They write documentation, test builds, and adapt daily to design changes. With such a high density of tasks, a specialist burns out quickly without automation.

The Pain Point: Manual Documentation Work

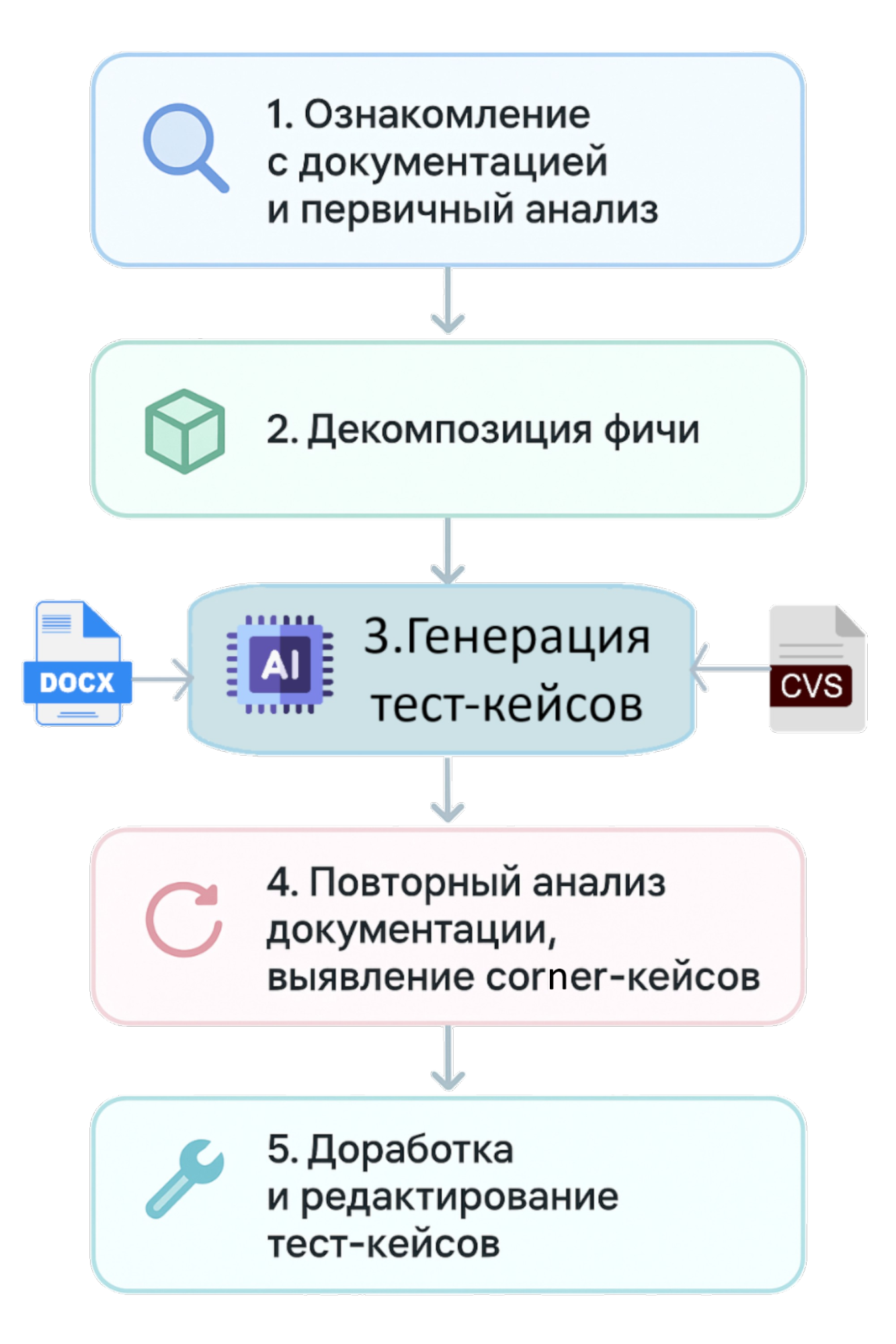

The testing cycle usually begins with documentation. The process, step-by-step, looks like this:

- familiarization with the GDD;

- decomposition of features;

- writing initial test cases;

- identifying edge scenarios;

- revisions and updates to the cases.

The Algorithm for Developing Test Documentation Manually

Which step is the most time-consuming? Of course, writing test cases. This is where AI can already be an excellent assistant today.

The Solution: AI as the First GDD Reader

What if all the preliminary work with the GDD (reading, analysis, case formatting) could be handed over to AI, allowing the tester to focus on what machines can't yet handle: creative scenarios, logic checks, and analytics?

To start, you will need:

- an AI assistant (such as ChatGPT, Claude, or Gemini);

- a prompt template;

- the GDD (in .docx or .pdf format);

- a CSV file template.

It sounds simple, but there are nuances. Let's break it down with an example.

How It Works: Step by Step

1. Document Upload

A specific page of the GDD, such as the description of the "Crafting System," is uploaded to the selected model.

2. GDD Analysis: Prompt

Before generating cases, AI needs to understand what it's analyzing. A sample request:

"Analyze the attached file CW_2.0.docx (page 5: ‘Crafting System’). Highlight:

- main functions (e.g., 'Create item from resources');

- strict rules;

- limitations;

- special conditions.

Confirm the list of functions before generating test cases."
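Because the same request is reused for every GDD section, it can be kept as a parameterized template. The following is a minimal sketch, assuming Python's standard `string.Template`; the names `ANALYSIS_PROMPT` and `build_analysis_prompt` are illustrative and are not part of the pipeline described here.

```python
from string import Template

# Illustrative template; the wording mirrors the sample request above.
ANALYSIS_PROMPT = Template(
    "Analyze the attached file $file_name (page $page: '$section'). Highlight:\n"
    "- main functions;\n"
    "- strict rules;\n"
    "- limitations;\n"
    "- special conditions.\n"
    "Confirm the list of functions before generating test cases."
)


def build_analysis_prompt(file_name: str, page: int, section: str) -> str:
    """Fill the template for one GDD section."""
    return ANALYSIS_PROMPT.substitute(
        file_name=file_name, page=page, section=section
    )


prompt = build_analysis_prompt("CW_2.0.docx", 5, "Crafting System")
print(prompt)
```

Keeping the file name, page, and section as parameters means only those three values change between features, while the instructions to the model stay identical.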

3. Case Generation: Strict Requirements

After confirmation, you can request test cases in CSV format:

“Generate test cases for the 'Crafting System' function in CSV format, as in the example test.csv.

Text only in CSV format, without Markdown, headings, or explanations.

Each case includes a name, steps, expected result.

Example:

"Positive: craft item C from A+B", "1. Open menu…", "Item C appears in inventory"”

It's important to limit how much text is generated in one request: ask for cases section by section rather than for the whole GDD at once, because models work more efficiently with a compact context.
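One way to keep each request compact is to build a separate generation prompt per GDD section. This is a sketch under assumptions: the section list is supplied by hand, "Inventory" is a made-up second section used only for illustration, and `prompts_per_section` is a hypothetical helper name.

```python
# Wording taken from the sample generation request above.
GENERATION_PROMPT = (
    "Generate test cases for the '{section}' function in CSV format, "
    "as in the example test.csv.\n"
    "Text only in CSV format, without Markdown, headings, or explanations.\n"
    "Each case includes a name, steps, expected result."
)


def prompts_per_section(sections: list[str]) -> list[str]:
    """Return one generation prompt per section instead of one for the whole GDD."""
    return [GENERATION_PROMPT.format(section=name) for name in sections]


# "Inventory" is a hypothetical section name, not taken from the case study.
prompts = prompts_per_section(["Crafting System", "Inventory"])
for text in prompts:
    print(text, end="\n\n")
```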

4. Export to TMS

The finished text is copied, saved as a .csv file, and then imported into a test management system (TestRail, Qase, Jira, etc.).

The output is a complete draft of test cases that can be used immediately after minimal revisions.
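Before importing, it may be worth checking that the model's reply really is three-column CSV. The sketch below uses Python's standard `csv` module; the column names `name`, `steps`, and `expected`, the header row, and the second sample case are assumptions for illustration, since the actual import format depends on the test management system.

```python
import csv
import io

FIELDS = ["name", "steps", "expected"]  # assumed column names


def parse_cases(raw_text: str) -> list[dict[str, str]]:
    """Parse the model's CSV reply and reject malformed rows."""
    cases = []
    reader = csv.reader(io.StringIO(raw_text.strip()), skipinitialspace=True)
    for row_no, row in enumerate(reader, start=1):
        if not row:
            continue  # skip blank lines
        if len(row) != len(FIELDS) or not all(cell.strip() for cell in row):
            raise ValueError(f"Row {row_no} is malformed: {row!r}")
        cases.append(dict(zip(FIELDS, (cell.strip() for cell in row))))
    return cases


def write_cases(cases: list[dict[str, str]], out_file) -> None:
    """Write validated cases with a header row, quoting every field."""
    writer = csv.DictWriter(out_file, fieldnames=FIELDS, quoting=csv.QUOTE_ALL)
    writer.writeheader()
    writer.writerows(cases)


# The first row comes from the example above; the second is invented sample data.
raw = (
    '"Positive: craft item C from A+B", "1. Open menu…", "Item C appears in inventory"\n'
    '"Negative: craft without resources", "1. Open menu…", "Item is not created"\n'
)
cases = parse_cases(raw)
buffer = io.StringIO()
write_cases(cases, buffer)
print(buffer.getvalue())
```

To produce a file instead of an in-memory buffer, pass a handle opened with `open(path, "w", newline="", encoding="utf-8")` to `write_cases`.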

What Next? Manual Review!

Even with a good model, the draft needs manual refinement. AI doesn't know all the nuances of the project, can't see the current build, and can't predict a player's unusual behavior.

Therefore, the role of the QA specialist remains crucial:

- removing duplicate and irrelevant cases;

- adding non-standard and creative scenarios;

- ensuring cases align with the actual game build.

The Algorithm for Developing Test Documentation with AI

Where AI Fails: Common Issues

Here are some typical problems we've found:

- hallucinations: the model generated a case about a "wall piercing system" even though the GDD only briefly mentioned object destruction;

- duplication: about 20% of cases were repeated with different wording;

- lack of creativity: none of the generated cases covered how the crafting system behaves during a jump; that bug was found later, manually.

AI is good at "reading" and structuring, but not at "imagining" the way a human does.
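Cases repeated with different wording can be pre-screened automatically before the manual pass. This is a minimal sketch using `difflib.SequenceMatcher` from Python's standard library; the 0.8 similarity threshold and the sample case names are assumptions, and flagged pairs are only candidates for a human to review, not confirmed duplicates.

```python
from difflib import SequenceMatcher


def find_near_duplicates(names: list[str], threshold: float = 0.8):
    """Return pairs of case names whose similarity ratio meets the threshold."""
    # Lowercase and collapse whitespace so trivial differences are ignored.
    normalized = [" ".join(name.lower().split()) for name in names]
    pairs = []
    for i in range(len(normalized)):
        for j in range(i + 1, len(normalized)):
            ratio = SequenceMatcher(None, normalized[i], normalized[j]).ratio()
            if ratio >= threshold:
                pairs.append((names[i], names[j], round(ratio, 2)))
    return pairs


# Sample names for illustration only.
case_names = [
    "Positive: craft item C from A+B",
    "Positive: craft item C from A + B",
    "Negative: craft without resources",
]
duplicates = find_near_duplicates(case_names)
for first, second, ratio in duplicates:
    print(f"{ratio}: {first!r} ~ {second!r}")
```

The comparison is pairwise, so its cost grows quadratically with the number of cases; for a per-section draft that is usually acceptable.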

Before and After Implementing AI

With manual work:

- reading GDD and writing cases took up to 30 hours;

- about 70% of this time was purely routine tasks.

After implementing AI:

- draft case generation — 30-40 minutes;

- revision and refinement — another 6-8 hours.

Total savings — up to 20 hours on one large feature.
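The arithmetic behind this estimate can be checked with the figures listed above. The sketch assumes the 30-hour upper bound as the manual baseline; the constant and function names are illustrative.

```python
MANUAL_HOURS = 30.0       # "up to 30 hours", taken here as the baseline (assumption)
DRAFT_MINUTES = (30, 40)  # draft case generation
REVIEW_HOURS = (6, 8)     # revision and refinement


def ai_assisted_hours() -> tuple[float, float]:
    """Return the fastest and slowest total time for the AI-assisted flow."""
    fastest = DRAFT_MINUTES[0] / 60 + REVIEW_HOURS[0]
    slowest = DRAFT_MINUTES[1] / 60 + REVIEW_HOURS[1]
    return fastest, slowest


fastest, slowest = ai_assisted_hours()
savings_low = MANUAL_HOURS - slowest
savings_high = MANUAL_HOURS - fastest
print(f"AI-assisted: {fastest:.1f}-{slowest:.1f} h, "
      f"saved: {savings_low:.1f}-{savings_high:.1f} h")
```

With a 30-hour baseline this works out to roughly 21 to 23.5 hours saved, the same order as the figure quoted above; a shorter manual baseline gives proportionally less.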

The time this frees up for QA specialists goes to exploratory testing, creative scenarios, and manual testing of complex cases.

Conclusion: AI is Not a Magician, but an Effective Tool

Artificial intelligence does not replace the tester — it shifts the focus from routine work to analysis, non-standard scenarios, and quality.

Pros:

- faster preparation of test cases;

- scalable basic coverage;

- reduced team workload.

Keep in mind:

- results require manual checking and adaptation;

- creative scenarios are left to humans;

- duplicates and logical errors are possible.

Conclusion

AI is a tool. It quickly turns a GDD into a working draft, but the final quality depends on the specialists themselves: they are the ones who turn the draft into a polished diamond.

We apply this approach to live projects and continue to develop it. If you face similar challenges, we'd be happy to share our experience.